Learning content for Gen AI from Google/Kaggle

All course resources are collected in a Notion notebook and published here: Notion-Site

- I have also placed all notebooks and learning resources in a Git repo. I will link to resources from my own repo so that the links stay under my control and do not expire: repo - link

This blog contains a summary of the learnings and exercises from this intensive 5-day course, along with its highlights.

Week 1

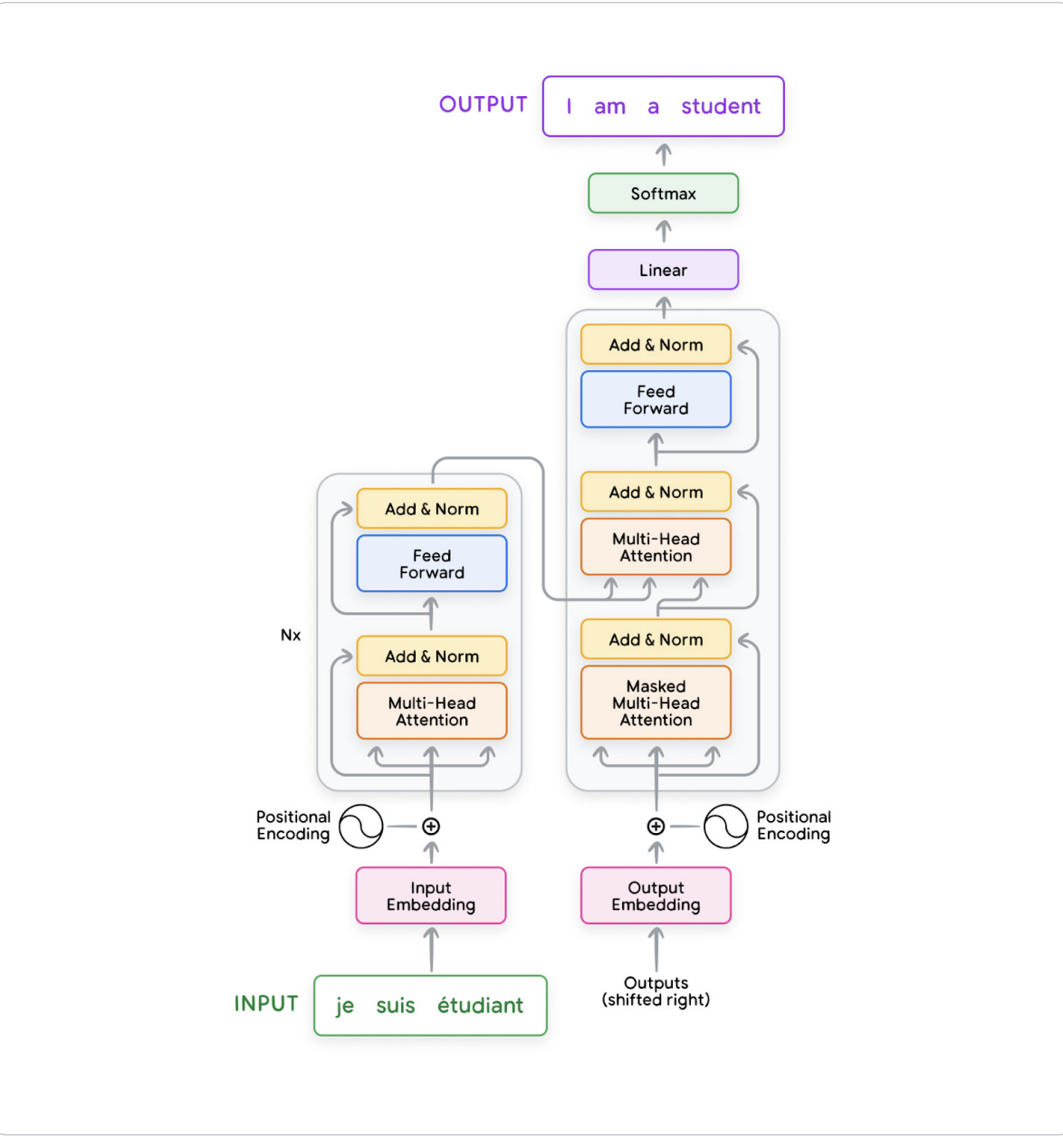

- The Week 1 reading covers foundational language models, the basics of transformers, and the evolution of GPT.

- It also introduces concepts such as fine-tuning, prompt engineering, and applications.

Week 1 contains two white papers: one on foundational LLMs and text generation, and another dedicated to prompt engineering.

White paper: Foundational Large Language Models & Text Generation

Summary and takeaways from this paper:

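- Early models such as RNNs (recurrent neural networks) had a limited ability to look back over long sequences, and they must process tokens sequentially, one step at a time, so training cannot be parallelized across the sequence.

- GRUs (Gated Recurrent Units) and LSTMs (Long Short-Term Memory networks) followed, but they share the same sequential limitation.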

- Before continuing further, I am going through a playlist created by 3Blue1Brown.

- This playlist gives visual explanations of the core concepts behind building a neural network.

- I am continuing to explore the playlist and other existing resources on YouTube to get an in-depth understanding.

- I am keeping all the resources in one place (Notion) and publishing them so others can use them: Notion Page
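To make the RNN limitation from the paper summary concrete, here is a minimal plain-Python sketch contrasting a recurrent update with self-attention. It is my own illustration, not code from the course: the scalar RNN, the weights `w_x=1.0` and `w_h=0.5`, and the choice Q = K = V (no learned projections) are simplifying assumptions. The RNN loop carries a hidden state from step to step, and the effect of the first input on the last state fades as the sequence grows. In self-attention, every output row is computed from the inputs alone, so positions are independent of each other and the first token is directly reachable from every position.

```python
import math


def rnn_forward(inputs, w_x=1.0, w_h=0.5, h0=0.0):
    """Toy scalar RNN (assumed form): h_t = tanh(w_x * x_t + w_h * h_{t-1}).

    Each step needs the previous hidden state, so the steps must run in order.
    """
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states


def first_token_influence(length, eps=1e-3):
    """Finite-difference estimate of how much the FIRST input moves the LAST state."""
    base = [0.5] * length
    nudged = [0.5 + eps] + [0.5] * (length - 1)
    return abs(rnn_forward(nudged)[-1] - rnn_forward(base)[-1]) / eps


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(xs):
    """Scaled dot-product self-attention with Q = K = V = xs (simplifying assumption).

    No state is carried between iterations of the outer loop, so every
    position could be computed in parallel.
    """
    d = len(xs[0])
    outputs, weights = [], []
    for q in xs:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in xs]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, xs)) for c in range(d)])
    return outputs, weights


if __name__ == "__main__":
    # The influence of the first token shrinks sharply as the sequence grows.
    print(first_token_influence(2), first_token_influence(10))
    _, w = self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
    print(w[-1])  # the last position attends to every position, including the first
```

In a real transformer, Q, K and V come from learned linear projections, and the per-position loop becomes a single matrix multiplication that runs in parallel on a GPU.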